Generative AI, SaaS Innovation, Value and Pricing

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talio.

In 2011: “software is eating the world” (Marc Andreessen)

In 2023: “AI is eating software”

At one level, this means that all software applications are layering in AI.

The obvious example is Microsoft Copilot (if you haven’t already seen this demo, take ten minutes to watch it).

Another example is HubSpot, where Dharmesh Shah is pretty excited.

One could fill several pages listing the different ways that AI is now being used in B2B SaaS.

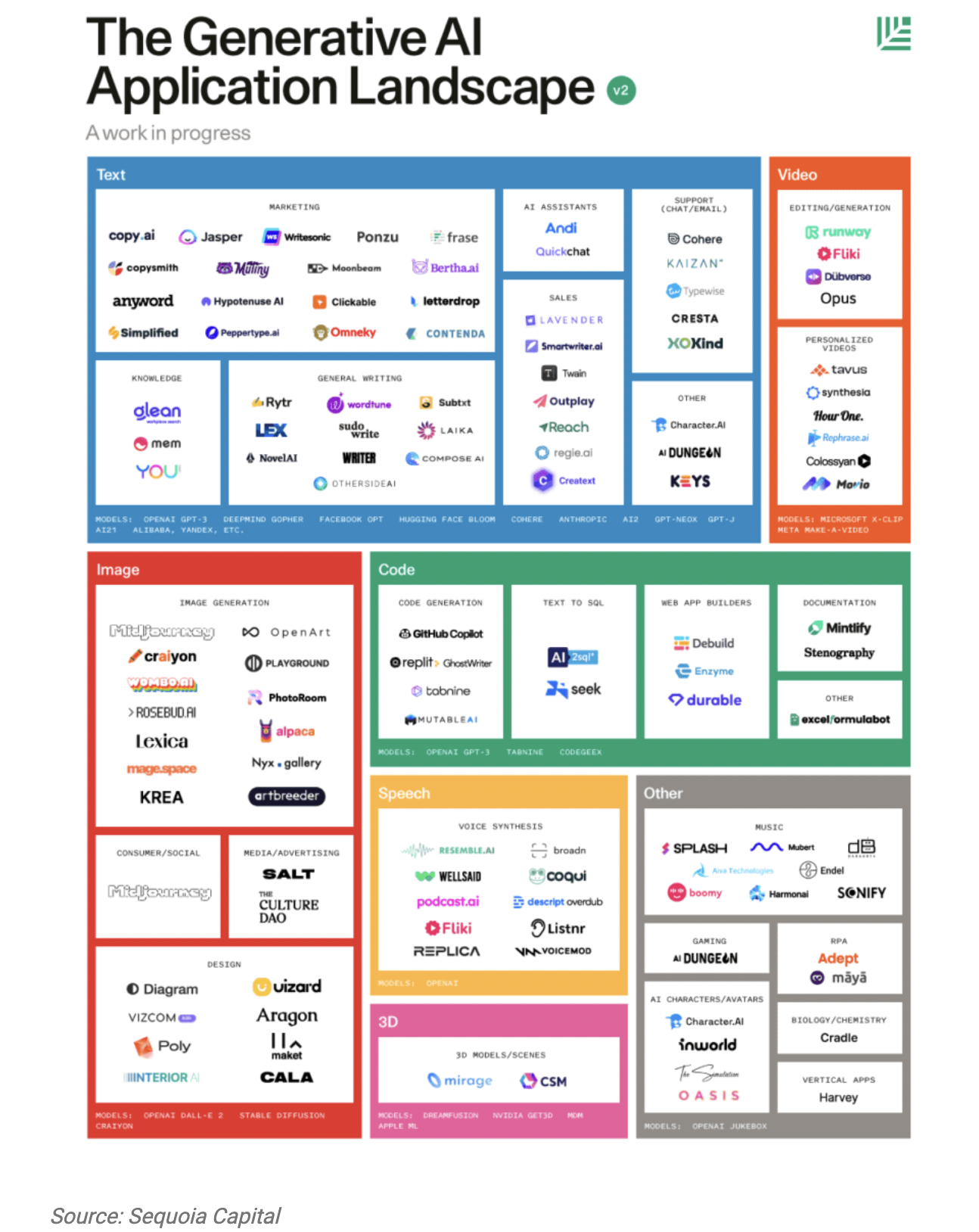

Even the relatively new space of content generation AIs has quickly gotten crowded. (From Sequoia Capital via CB Insights)

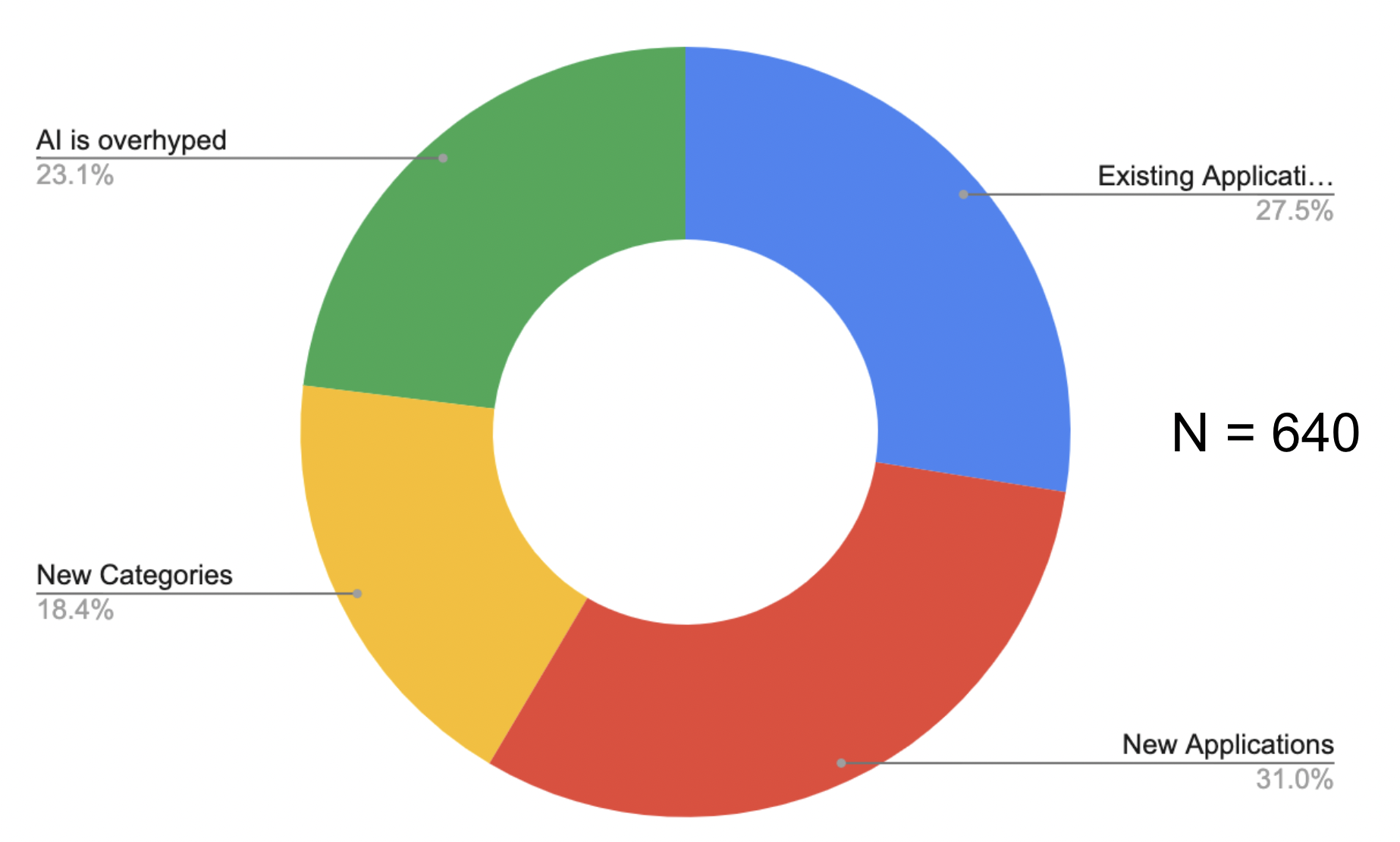

So where will AI have the biggest impact on SaaS, and where will the most value be created over the next three years? We asked the question across a number of LinkedIn Groups (Design Thinking, Software as a Service - SaaS, the Professional Pricing Society) and among my own personal connections. Six hundred and forty people responded.

The poll choices (LinkedIn limits you to 4) were:

Existing Applications

New Applications

New Categories

AI is Overhyped

As usual there were some interesting comments.

“Most products built around AI (sense, category, prescribe, automate) are perceived as high-touch buyer motions by customers.

Based on that, and to the question .... it greatly depends on the (1) existing hurdles in customer adoption & journey to impact (2) vendor risk profile (3) operational risks vs outcome upside.”

“Decide for yourself, here is a prompt I inputed into an AI tool:

"Make a joke about how ai is over hyped"

Here is the output in roughly 5 seconds:

"AI, or artificial intelligence, is all the rage these days. It seems like everywhere you turn, there's a new company claiming to have the latest and greatest AI technology. But let's be real, folks. AI is just a fancy way of saying "computer program."

Sure, it can do some cool things like beat humans at chess or recognize your face in a crowd, but let's not get too carried away. I mean, have you ever tried to have a conversation with Siri or Alexa? They're about as intelligent as a brick wall.

So, let's all take a deep breath and remember that AI is just a tool, not the second coming of Einstein. And if you're worried about machines taking over the world, just remember that they still can't fold laundry or make a decent cup of coffee."”

Several different patterns have been seen when a new foundational technology gains mass adoption.

Pattern 1: Inside Out - Apply to what we know and build out (the most common)

1. Use in existing applications
2. Use in new applications
3. Create new categories

Pattern 2: Outside In - Create new ground and then backfill

1. Create new categories
2. Use in new applications
3. Use in existing applications

Pattern 3: Bottom Up - Start by solving a specific problem

1. Use in new applications
   - Use in existing applications
   - Create new categories

(The indentation for Pattern 3 is intentional: one can go from new applications to disrupting and competing with existing applications, or from new applications to category creation.)

We are seeing all three of these patterns emerge simultaneously with Content Generation AIs.

Microsoft Copilot is an example of Pattern 1 - Inside Out: use first in existing applications, albeit at a massive scale. In this case the pricing tactic is to monetize through increased market share and possibly through some price increases to the Microsoft 365 family. Google will be forced to respond to the Microsoft-OpenAI partnership by putting its own LLM to work in Google Workspace.

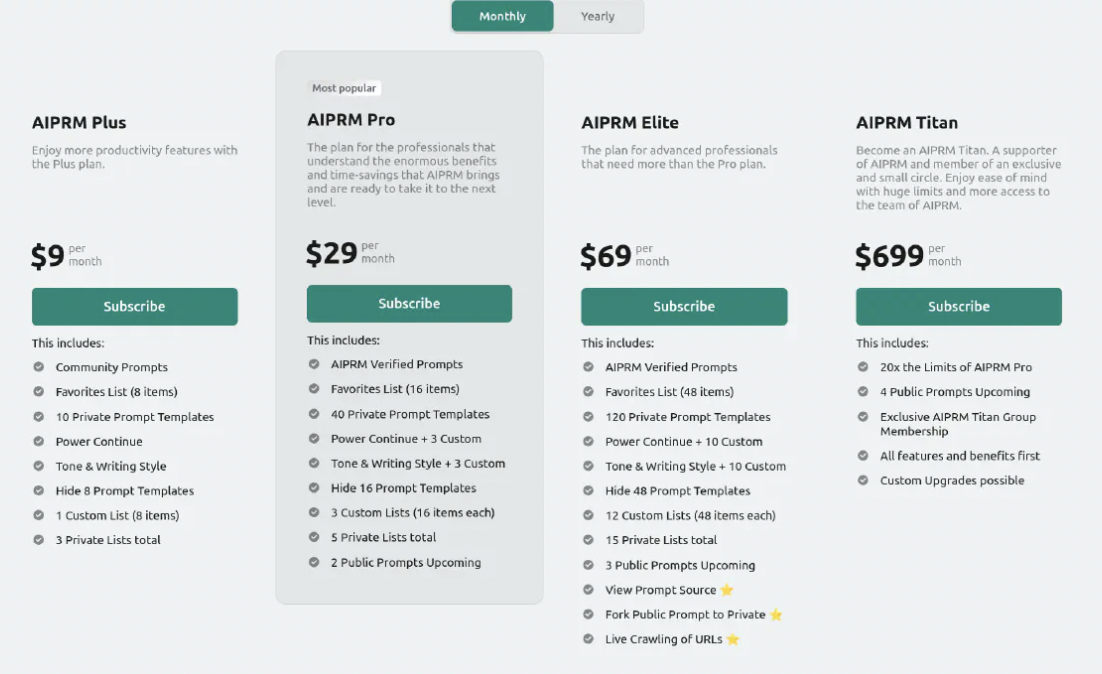

An example of Pattern 3 - Bottom Up, category creation, is the rapidly emerging market for prompts and prompt management tools. In a few short weeks we have seen a proliferation of prompt marketplaces, from the original PromptBase to sites focussed on SEO and marketing like AIPRM. AIPRM has a pricing page.

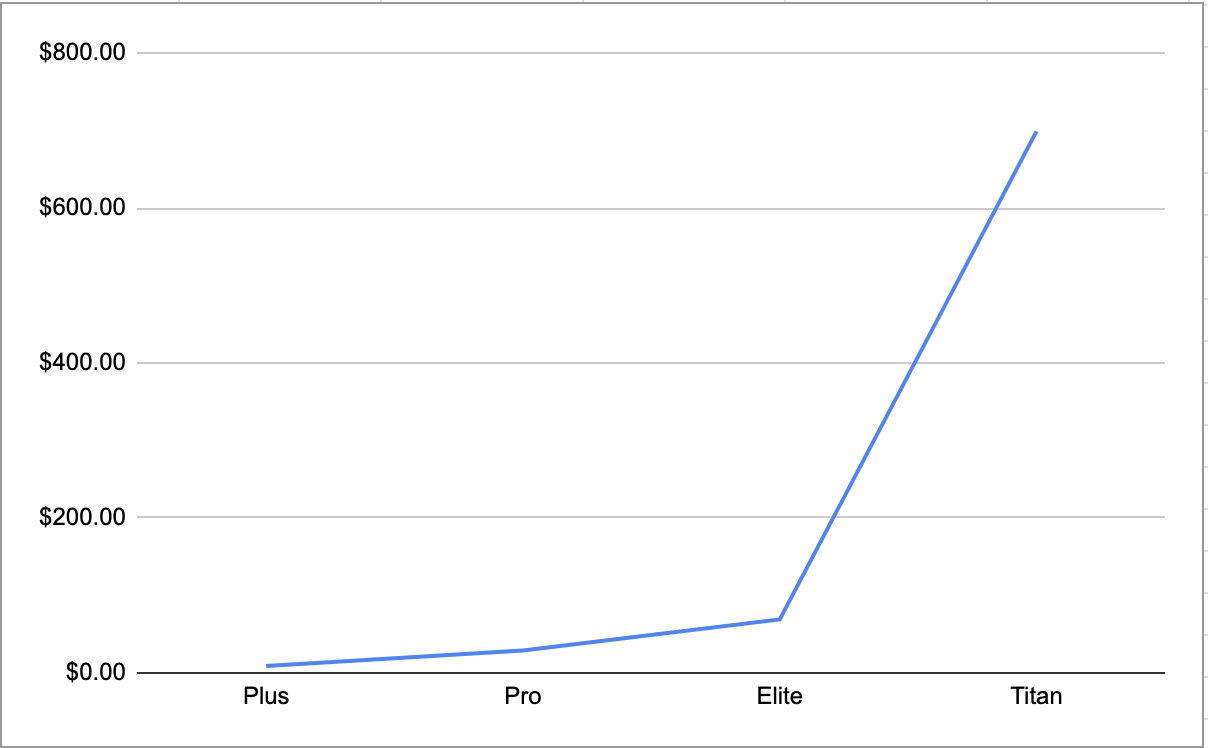

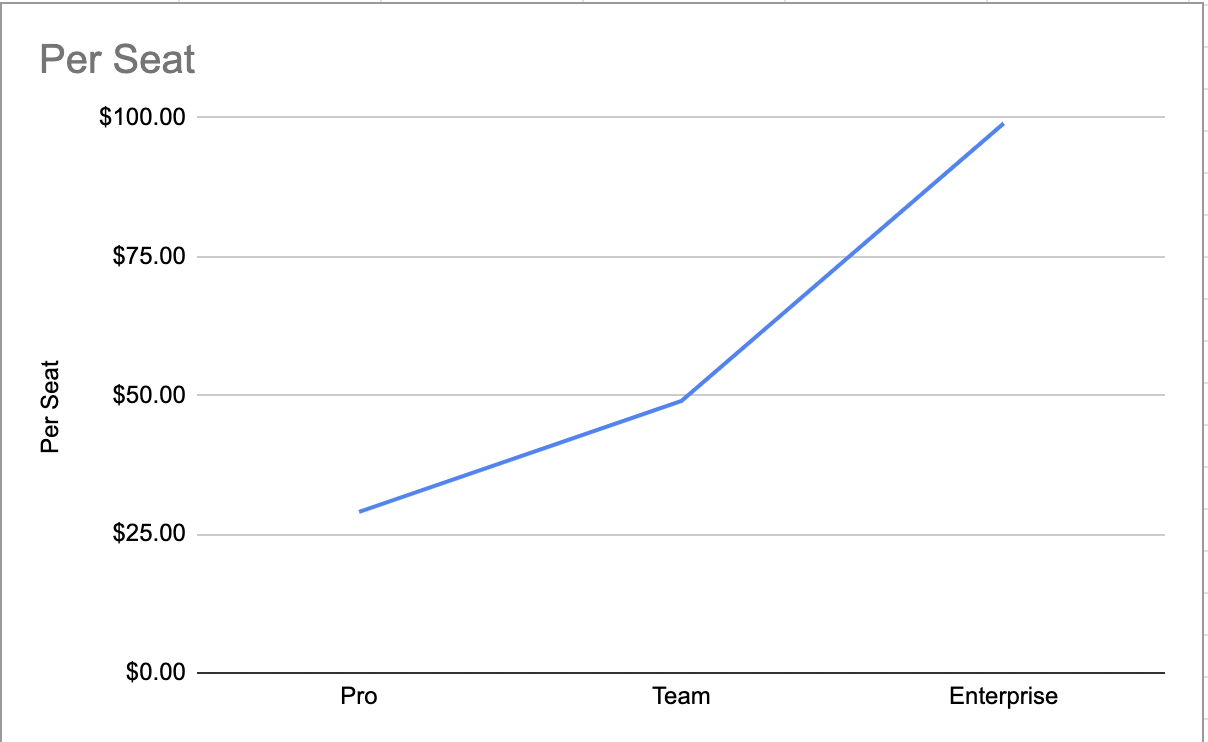

Note the deep convexity of the package pricing curve, suggesting that most of the market is at the smaller scale with only a few early adopters willing to pay $699 per month.

Pattern 2 - Outside In, create new applications in existing categories, is also showing up, with a lot of the activity being seen in the Martech category. An example here is Copymatic, which offers an AI to perform copywriting services.

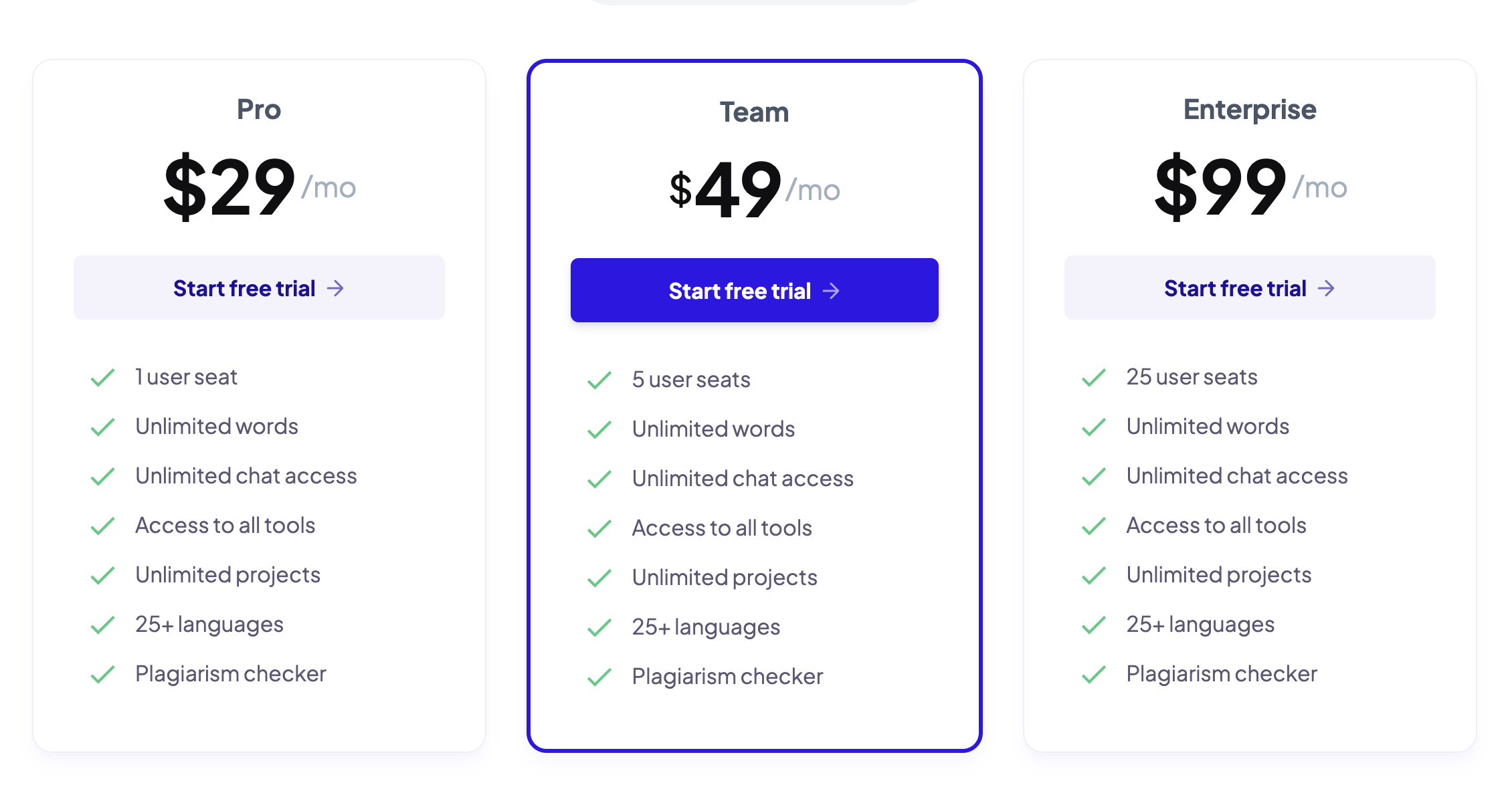

The pricing is per seat. (Is this the best pricing metric here?)

The package pricing curve is convex, but not as extreme as AIPRM.
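To make "convex" concrete, here is a minimal Python sketch of one way to test and compare package pricing curves. It assumes the packages are simply ordered from smallest to largest and treats them as equally spaced. The function names and the lower-tier prices are hypothetical placeholders, not figures from the AIPRM or Copymatic pricing pages. Only the $699 top tier comes from the text above.

```python
from typing import List, Sequence


def second_differences(prices: Sequence[float]) -> List[float]:
    """Discrete second differences of prices ordered from smallest to largest package."""
    return [
        prices[i + 1] - 2 * prices[i] + prices[i - 1]
        for i in range(1, len(prices) - 1)
    ]


def is_convex(prices: Sequence[float]) -> bool:
    """True when every step up in package costs at least as much as the step before it."""
    return all(d >= 0 for d in second_differences(prices))


def step_ratio(prices: Sequence[float]) -> float:
    """Last price step divided by the first; values far above 1 suggest a deeply convex curve."""
    first_step = prices[1] - prices[0]
    last_step = prices[-1] - prices[-2]
    return last_step / first_step


# Hypothetical monthly prices (illustrative only; lower tiers are invented).
deep_curve = [9, 29, 99, 699]   # steep jump to the top package
mild_curve = [9, 19, 32, 49]    # gentler progression

for name, curve in [("deep", deep_curve), ("mild", mild_curve)]:
    print(name, is_convex(curve), step_ratio(curve))
```

Under these assumptions both curves are convex, but the step ratio separates them: the top step of the first curve is many times larger than its first step, which is the shape one would expect when only a few buyers are willing to pay for the largest package.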

Given all this activity, we need a framework for understanding how all the pieces of the AI ecology fit together and how they will be priced.

One can map from the structure of the AI ecology to value and pricing metrics.

As always, one wants to begin by building a value model and then deriving the pricing model.

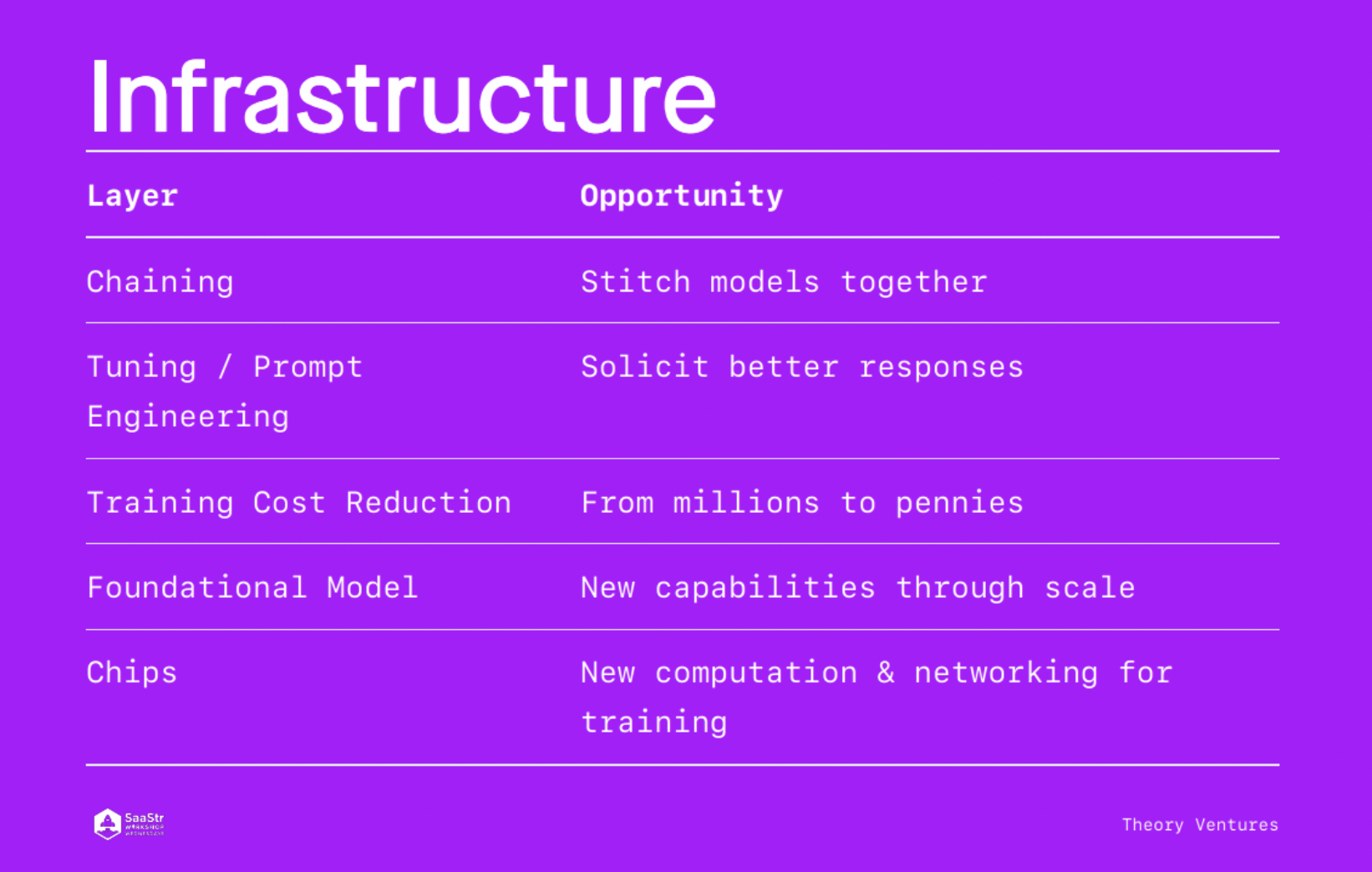

Another recent attempt to bring some order to the AI commercial environment comes from venture capitalist Tom Tunguz, now at Theory Ventures.

In an interview with SaaStr - The Impact of Generative AI on Software - he proposes the following way to organize AI infrastructure.

He goes on to ask some basic questions that anyone who wants to pitch Theory Ventures should be able to answer.

What layer of the stack will you build in?

What market will you pursue?

What moat will you create?

What level of technical depth are you comfortable with?

The answers to each of these questions will also impact how you think about pricing.

What layer of the stack will you build in?

The layer is closely associated with the value metric and therefore the pricing metric. There will be standard value metrics used to evaluate each layer and these should be used in pricing.

What market will you pursue?

The question is not just ‘what market’ but whether one is creating an extension to an existing product, a new product or a new category. This will have a big impact on how one thinks about the value metric and the pricing metric.

What moat will you create?

Simple applications built on third-party models will be easy to replicate, so they have very shallow moats. There are several ways to build moats in this space.

Build your own model (which may cost a very large amount of money)

Augment a model (this will be a common approach)

Combine models (and provide the tooling to do this)

Support a process (many new processes are emerging, like those associated with prompt engineering)

Add interpretive tools to make the outputs easier to understand

Add tools and integrations that support actions prescribed by the AI

Track outcomes and feed them back into the system to support continuous improvement

What level of technical depth are you comfortable with?

AI is technical, even when you are just entering prompts into an LLM and processing the output. Most of us will not be developing large language models ourselves. These models will soon cost billions of dollars to build, optimize and operate, and will contain trillions of parameters. But we will need to understand prompt engineering, probability, natural language processing, parametric design and so on. The key will be to apply deep domain knowledge to AI platforms and to orchestrate the workflow. This will require technical knowledge.

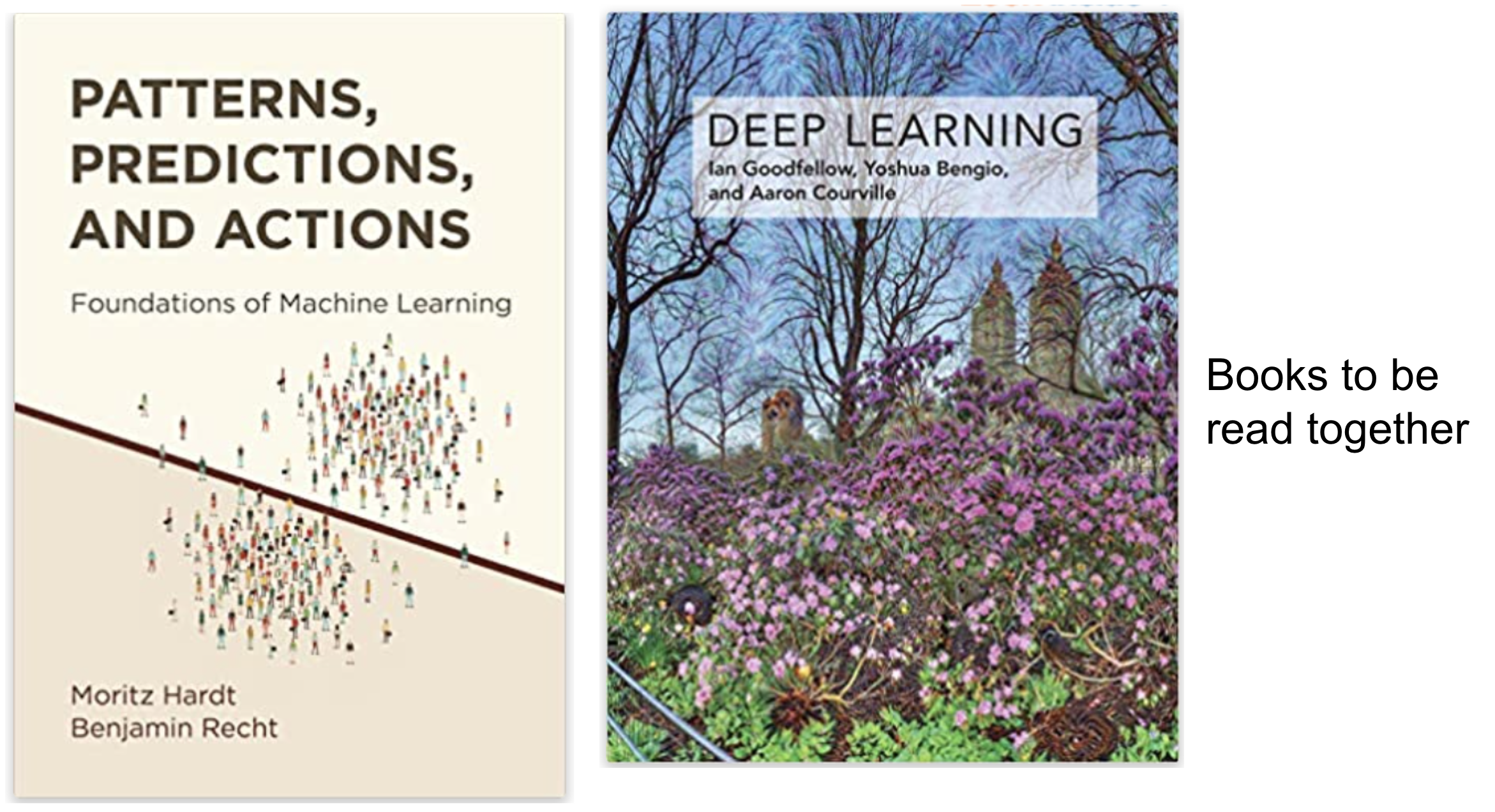

Getting a good grounding in AI and machine learning is important. And the math used is pretty straightforward compared to many other technical fields (try statistical fluid dynamics for a mind stretch). Two books I used to develop my own knowledge were:

Patterns, Predictions, and Actions: Foundations of Machine Learning by Moritz Hardt and Benjamin Recht (Princeton University Press, 2022)

Deep Learning by Ian Goodfellow, Yoshua Bengio and Aaron Courville (MIT Press, 2016)

AI will rewrite the software business as we know it, including how we understand value and use it to price. The model-driven approach to pricing will accelerate rapidly, fed by AI-generated models. Exciting times.