How OpenAI prices inputs and outputs for GPT-4

Steven Forth is a Managing Partner at Ibbaka.

See the update: Pricing thought: OpenAI will price reasoning tokens in o1

Many companies are looking at how to use OpenAI’s GPT-4 to inject AI into existing solutions. Others are looking to build new applications or even whole new software categories on the back of Large Language Models (LLMs). To make this work, we need a good understanding of how access to and use of these models will be priced. Let’s start with the current pricing for GPT-4. It is possible that this pricing will shape that of other LLM vendors, at least those that provide their models to many other companies. Or pricing may diverge and drive differentiation. It will be interesting to see, and pricing will canalize the overall direction of innovation.

As I write, in April 2023, GPT-4 is available in two packages and has two pricing metrics.

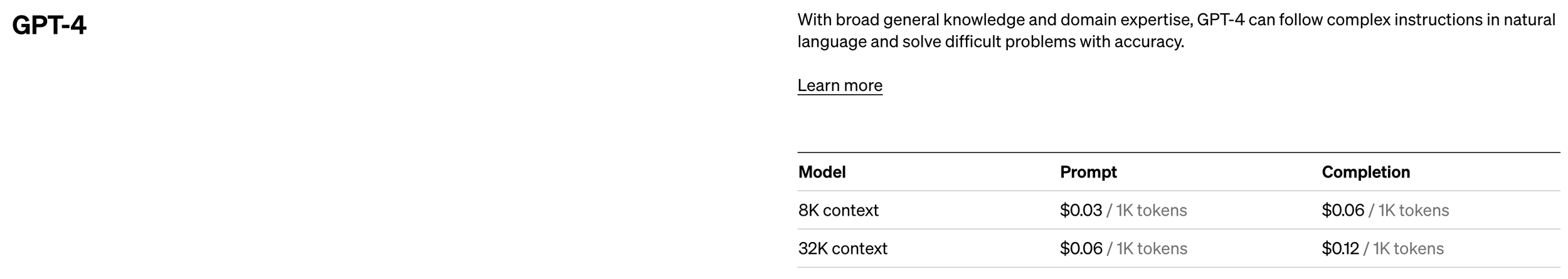

The pricing page is shown below. In addition to GPT-4, OpenAI publishes pricing for Chat, InstructGPT, model tuning, Image models and Audio models. Many real-world applications will combine more than one model. The interactions between the different pricing models will be important, but let’s start with the basics.

Before diving into the pricing, one needs to understand the key input metric, tokens. Tokens show up all over the place in LLMs and ‘tokenization’ is the first step in building and using these models. Here is a good introduction.

A token is a part of a word. Short and simple words are often one token, longer and more complex words can be two or three tokens.

It can get more complex, but that is the basic idea. OpenAI bases its price on tokens. This makes sense at this point. It allows them to price consistently across some very different applications. Other companies could learn from this. One place to start thinking about pricing metrics is at the atomic level of the application, whether this is a token, an event, a variable or an object and its instantiations.

OpenAI has two packages. They are based on ‘context’: 8K of context and 32K of context. Generally, more sophisticated applications solving harder problems will need more context.

The pricing metrics are the Input Tokens (in the prompts) and the Output Tokens (the content or answer generated).

For the 8K and 32K contexts, the price is as follows:

Input: $0.03 (8K) or $0.06 (32K) per thousand tokens

Output: $0.06 (8K) or $0.12 (32K) per thousand tokens
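The price list above translates directly into a small calculation. Here is a minimal sketch in Python, assuming only the April 2023 rates quoted here; the names `PRICE_PER_1K` and `request_cost` are made up for illustration and are not part of any OpenAI library.

```python
# GPT-4 list prices quoted above (April 2023), in US dollars per 1,000 tokens.
PRICE_PER_1K = {
    "8K": {"input": 0.03, "output": 0.06},
    "32K": {"input": 0.06, "output": 0.12},
}


def request_cost(package: str, input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost of one request on the given package."""
    rates = PRICE_PER_1K[package]
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1000


# Example: 1,000 tokens in and 1,000 tokens out on each package.
for package in PRICE_PER_1K:
    print(package, round(request_cost(package, 1000, 1000), 4))
```

For the same token counts, the 32K package costs twice as much as the 8K package, so the larger context is only worth paying for when the application needs it.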

Note that outputs are priced twice as high as inputs. This may encourage more and larger inputs (prompts), and more elaborate prompts will often provide better outputs.

Why did OpenAI choose to have two different pricing metrics? The two pricing metrics sound very similar.

The number of tokens input and the number of tokens output could be very different.

Scenario 1: Write me a story

Prompt “Tell me a fairy tale in the style of Hans Christian Andersen where a robot becomes an orphan and then falls in love with a puppet.” (Try this, it is kind of fun.)

Here the prompt has about 20 tokens but the output could have several thousand tokens.

Scenario 2: Summarize this set of reports into a spreadsheet and calculate the potential ROI

Prompts “Five spreadsheets with a total of 20,000 cells and two documents of 10,000 words each.” This works well too, but needs good input data: well-structured spreadsheets and a rich data set.

Here the set of prompts would have about 30,000 tokens but the output would have only, say, 1,000 tokens.

Assuming both are on the 32K package, the price for each scenario would be …

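Scenario 1: $0.001 + $0.30 = $0.301 (taking the output to be about 2,500 tokens)

Scenario 2: $1.80 + $0.12 = $1.92 (30,000 input tokens at $0.06 per thousand plus 1,000 output tokens at $0.12 per thousand)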

These may sound like small usage fees, but there are companies that are planning to process millions of prompts a day as they scale operations. It adds up.
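To get a feel for how it adds up, here is a rough sketch that scales Scenario 1's per-prompt cost of about $0.301 to different daily volumes. The volumes and the 30-day month are illustrative assumptions, not figures from OpenAI, and the function name is made up for this example.

```python
def daily_and_monthly_cost(cost_per_prompt: float, prompts_per_day: int, days: int = 30):
    """Scale a per-prompt cost to a daily bill and an (assumed 30-day) monthly bill."""
    daily = cost_per_prompt * prompts_per_day
    return daily, daily * days


# Scenario 1's per-prompt cost from the article; the volumes are illustrative.
COST_PER_PROMPT = 0.301

for volume in (10_000, 100_000, 1_000_000):
    daily, monthly = daily_and_monthly_cost(COST_PER_PROMPT, volume)
    print(f"{volume:>9,} prompts/day: ${daily:>12,.2f} per day, ${monthly:>14,.2f} per month")
```

At a million story-style prompts a day, a fee of about thirty cents each comes to roughly $300,000 a day, or about $9 million over a 30-day month.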

The two pricing factors interact in interesting ways across different use cases. In many use cases, the output will drive the pricing, but in others, like scenario two, and many other analytical use cases, it is the inputs that will drive the price.
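One way to see which metric drives the price is to compute the share of each request's cost that comes from the input side. The sketch below uses the 32K rates and the token counts from the two scenarios. The 2,500 output tokens for the story are an assumption standing in for "several thousand", and `input_share` is a name invented for this example.

```python
# 32K-package rates quoted in the article, in US dollars per 1,000 tokens.
INPUT_RATE = 0.06
OUTPUT_RATE = 0.12


def input_share(input_tokens: int, output_tokens: int) -> float:
    """Fraction of a request's cost that comes from the input (prompt) side."""
    input_cost = input_tokens * INPUT_RATE / 1000
    output_cost = output_tokens * OUTPUT_RATE / 1000
    return input_cost / (input_cost + output_cost)


# Token counts from the two scenarios; 2,500 output tokens for the story is an
# assumption standing in for "several thousand".
scenarios = {
    "Scenario 1 (story)": (20, 2_500),
    "Scenario 2 (analysis)": (30_000, 1_000),
}

for name, (tokens_in, tokens_out) in scenarios.items():
    share = input_share(tokens_in, tokens_out)
    print(f"{name}: {share:.1%} input, {1 - share:.1%} output")
```

Under these assumptions, inputs account for well under 1% of the cost of the story and roughly 94% of the cost of the analysis. Because output tokens cost twice as much as input tokens, the two sides contribute equally only when a request has twice as many input tokens as output tokens.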

Things to think about. This may be an effective pricing model. One would need to build a value model, a cost model and process a lot of data to really know, but it seems like a good place to start. I assume OpenAI will be doing a lot of this analysis over the next few months and I expect the pricing to change. They are likely using AI to support this analysis and I hope they share their approach.

One reason this model works is that it uses two pricing metrics. Using two pricing metrics, also known as hybrid pricing, is a key to having flexible pricing that will work at different scales and in different scenarios. Most SaaS companies should be considering some form of hybrid pricing.