AI Pricing: What does Box pricing tell us about AI pricing trends?

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talio.

The cost of operating (not building) Large Language Models is emerging as a real issue in AI pricing and is changing the basic assumptions about SaaS business models. See AI Pricing: Operating Costs will play a big role in pricing AI functionality.

One can see this at work in how Box is pricing Box AI. See Box unveils unique AI pricing plan to account for high cost of running LLMs.

Box AI is positioned around ‘unlocking the value of enterprise content.’ Many companies store their content on Box or similar systems, which puts Box in a good position to make that content available to the language model while keeping it proprietary. Keeping content proprietary is a requirement for many companies. There are several architectures that support this (for one example, see AI Pricing: Will the popularity of RAGs change how we price AI?). Here is how Box positions itself.

This makes good sense. I want to use my proprietary content to get more relevant responses from my AI. But how should I pay for this?

Box has taken an approach that blends per-user pricing and an enterprise pool. Each user gets 20 credits that they can use for any function that calls Box AI. This could be creating content in Box Notes or asking questions about specific documents. Once an individual user has used all of their individual credits, they can dip into a pool of 2,000 AI credits available to the company. It is not clear whether unused individual credits can be added back into the enterprise pool, but that would make sense. It would encourage use by power users and ensure that the enterprise buyer gets what they paid for. The enterprise pool could also scale with the number of users so that, say, 2 credits were added to the enterprise pool for each user with 20 credits. One could also look at a scaling discount. Box would need to be careful about this, though, as there are hard operating costs each time a user spends a credit.

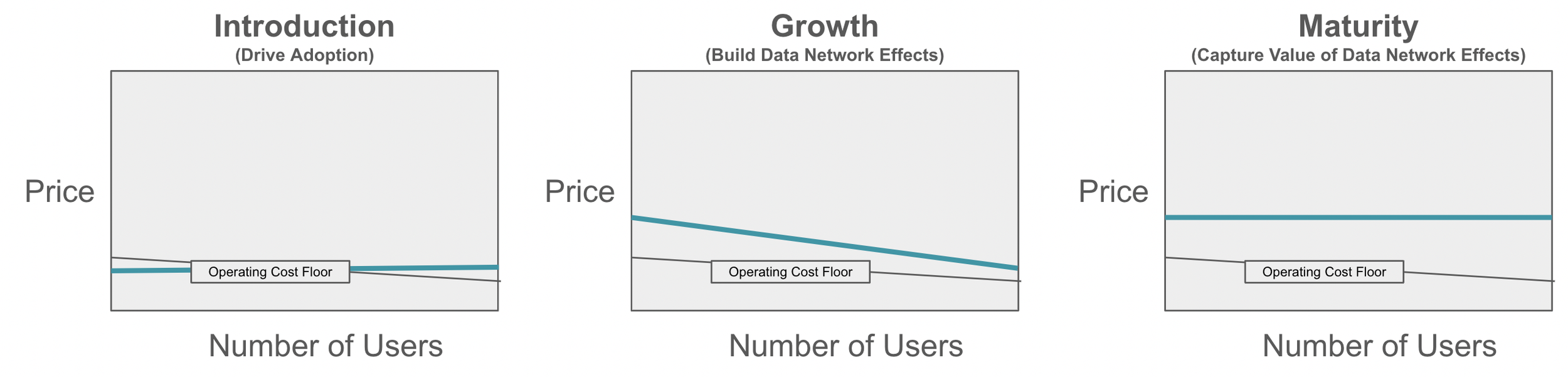
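The credit mechanics described above can be sketched in a few lines of Python. This is only an illustration of the logic as described in this post, not Box's implementation: the class name `CreditLedger` and its methods are hypothetical, and the only figures taken from the post are the 20 credits per user and the 2,000-credit enterprise pool.

```python
from dataclasses import dataclass, field

USER_CREDITS = 20      # individual allowance, as described in the post
POOL_CREDITS = 2_000   # shared enterprise pool, as described in the post


@dataclass
class CreditLedger:
    """Hypothetical ledger: individual allowances plus a shared pool."""
    pool: int = POOL_CREDITS
    users: dict[str, int] = field(default_factory=dict)

    def add_user(self, name: str) -> None:
        """Give a new user the individual allowance."""
        self.users[name] = USER_CREDITS

    def spend(self, name: str, credits: int = 1) -> bool:
        """Spend from the user's own credits first, then from the pool.

        Returns False, and changes nothing, if the request cannot be covered.
        """
        own = self.users[name]
        from_own = min(own, credits)
        from_pool = credits - from_own
        if from_pool > self.pool:
            return False
        self.users[name] = own - from_own
        self.pool -= from_pool
        return True


ledger = CreditLedger()
ledger.add_user("power_user")
for _ in range(25):          # 25 Box AI calls by one heavy user
    ledger.spend("power_user")
print(ledger.users["power_user"], ledger.pool)
```

In this sketch the heavy user exhausts the individual allowance after 20 calls and draws the remaining 5 from the enterprise pool. Returning unused individual credits to the pool, or growing the pool by a few credits per user, would be small extensions of the same structure.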

Many people assume that prices will go down with volume and that as more and more users are added the price per user will go down. This may not be the case for AI-based applications. Unlike applications running on relational databases (the vast majority of B2B SaaS applications), where the cost of each action is minimal, current generative AI applications consume a lot of processing each time they are asked to do something. This cost is likely to go down over time, but I doubt it will ever get to anything close to what we see with conventional applications.
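A rough way to see why volume does not drive cost per user toward zero is to split cost into a fixed part that is shared across users and a variable part that is paid on every action. The sketch below assumes that simple two-part model; the function name and every number in it are hypothetical and chosen only to make the contrast visible.

```python
def cost_per_user(fixed_cost: float, cost_per_action: float,
                  actions_per_user: int, users: int) -> float:
    """Fixed cost is spread across users; per-action cost is not."""
    return fixed_cost / users + cost_per_action * actions_per_user


# Hypothetical figures for illustration only.
FIXED = 10_000.0          # platform cost shared by all users
ACTIONS = 500             # actions per user per period
CONVENTIONAL = 0.0001     # cost of a database-backed action
GENERATIVE = 0.02         # cost of an action that calls an LLM

for users in (100, 1_000, 10_000):
    saas = cost_per_user(FIXED, CONVENTIONAL, ACTIONS, users)
    ai = cost_per_user(FIXED, GENERATIVE, ACTIONS, users)
    print(f"{users:>6} users: conventional {saas:8.2f}  generative AI {ai:8.2f}")
```

Under these assumed numbers the conventional application's cost per user keeps falling toward a few cents as users are added, while the generative AI application's cost per user levels off near the variable cost of 500 actions. That floor is what limits volume discounts.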

At the same time, the more users on a system contributing content, the more valuable the system becomes. In How Network Effects Make AI Smarter by Sheen Levine and Dipak Jain (from back in March 2023 on HBR), this is referred to as data network effects (or data-driven learning). If users bring content to an AI platform and the platform uses that content to enhance the language model, then there is a data network effect at work. The same is true if the users grade responses or otherwise provide feedback that can be used to train the model.

One of the challenges in pricing generative content AIs is to balance the value brought in by users through data network effects with the cost of operating (not building) a generative AI application. The desire to have more users contributing more content pushes prices lower, while the operating costs set a floor to responsible pricing. At the same time, the value goes up with the number of users (the network effect), and at some point it makes sense to start increasing prices to reflect the growing value. One will likely see pricing curves evolve over time.

Companies pricing generative AI applications will want to find flexible designs that balance three forces, as Box has done:

Account for operating costs (which do not have the same economies of scale that SaaS businesses assume)

Build value by leveraging data network effects (this means your systems are generating more and more value and that you are not completely reliant on the large LLM vendors)

Model value and capture that value in pricing as the value grows
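One way to picture how these three forces interact is a toy pricing curve in which the price per user is the larger of a cost-based floor and a share of the value delivered, with value growing as users are added. This is a sketch under stated assumptions, not a recommended pricing formula: the logarithmic growth of value with users, the function name, and all parameter values are hypothetical.

```python
import math


def price_per_user(users: int, operating_cost: float, margin: float,
                   base_value: float, network_gain: float,
                   value_share: float) -> float:
    """Toy model: price is the higher of a cost floor and a share of value.

    Value per user is assumed to grow with log10(users) to stand in for
    a data network effect; users must be at least 1.
    """
    floor = operating_cost * (1 + margin)                  # force 1: operating costs
    value = base_value * (1 + network_gain * math.log10(users))  # force 2: network effects
    return max(floor, value_share * value)                 # force 3: capture value as it grows


# Hypothetical parameters for illustration only.
params = dict(operating_cost=10.0, margin=0.2,
              base_value=40.0, network_gain=0.5, value_share=0.2)

for n in (1, 10, 100, 1_000):
    print(f"{n:>5} users: price per user {price_per_user(n, **params):.2f}")
```

With these assumed parameters the price sits at the cost floor while the user base is small and only rises above it once the value created by the network effect is large enough. The actual shape of the value curve would have to come from a value model of the specific application.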

Read other posts on pricing design

AI Pricing: Will the popularity of RAGs change how we price AI?

AI Pricing: Operating Costs will play a big role in pricing AI functionality

AI Pricing: Early insights from the AI Monetization in 2024 research

What to price? What to optimize? How to optimize? Three key pricing questions

Pricing under uncertainty and the need for usage-based pricing

Enabling Usage-Based Pricing - Interview with Adam Howatson of LogiSense