Pricing AI content generation

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talent.

Pricing and Innovation

A series of three posts on why and how value and pricing experts can get involved in innovation.

Part 1 When should pricing get involved in innovation

Part 2 Pricing innovation and value drivers

Part 3 Pricing AI content generation (this post)

Pricing AI content generation

In the first two posts in this series, we provided some framing for how pricing and value management can contribute to innovation. Let’s look at a concrete example, AI content generation. There has been a lot of investment in content generation AIs, and in the summer of 2022 there was an explosion in applications and interest. With interest came use. With use came a rapid improvement in quality. Now people have started to ask, ‘How can these things make money?’

Generative adversarial networks and AI content generation

Over the past two years AI content generation has taken off and is now being used to write articles, compose music, lay soundtracks onto videos and, over the past few months, to generate images from text strings. In this post, we focus on the last of these, AI image generation, to see how one might monetize these hugely popular apps.

The Parallel Hypothesis:

If one can use AI to describe or categorize a piece of content, one can use AI to generate similar content.

Some people have been surprised that deep learning AIs are so good at content generation, but this is part of their underlying logic. An example of this is GANs or Generative Adversarial Networks. A GAN is two AIs that compete with each other, one to generate content and one to tell ‘real’ content from ‘fake’ content.

The Generator creates content, and its job is to fool a second AI, the Discriminator, whose job is to distinguish real images from images created by the Generator. In a GAN, the Generator and Discriminator compete with each other, and both get better.
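To make the Generator and Discriminator loop concrete, here is a minimal sketch of a toy GAN in plain Python. It is an illustration under stated assumptions, not how Dall-E or any production system is built: the 'content' is a single number rather than an image, the 'real' data is assumed to come from a normal distribution centred on 4, and both networks are reduced to two parameters each with hand-written gradients. The function and parameter names are hypothetical.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0) -> dict:
    """Train a one-dimensional GAN with hand-written gradients.

    'Real' content is a number drawn from N(4, 1) (an assumption for this toy).
    Generator:      x_fake = a * z + b, with noise z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), its estimate that x is real
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # Generator parameters
    w, c = 0.0, 0.0  # Discriminator parameters

    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: minimise -log D(x_real) - log(1 - D(x_fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimise -log D(x_fake), i.e. try to fool
        # the freshly updated Discriminator.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w
        a -= lr * grad_x * z
        b -= lr * grad_x

    return {"a": a, "b": b, "w": w, "c": c}


params = train_toy_gan()
print(params)
```

Each pass through the loop is one round of the competition: the Discriminator is nudged to separate real from fake, then the Generator is nudged to look more real to it. Training of this kind can oscillate rather than settle, so the sketch makes no claim about where the parameters end up.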

So one could have as a ‘real image’ something like ‘paintings of fruit by Cézanne’ and challenge the Generator to create images that would fool the Discriminator. Here are eight images: four actual paintings and four generated by Dall-E 2, one of the most popular AI image generators. If you haven’t yet played with Dall-E, give it a try (the name is supposed to make you think of the artist Salvador Dali and the robot WALL-E from the Pixar movie).

The top are the originals, the bottom are generated by the AI.

AI content generation already exists for

Text - everything from poetry and novels to opinion pieces and technical reports

Translations - between languages and the creation of new languages

Images - what we will focus on here

Music - of many styles and emotional tones

Images for Music - static and dynamic

Music for Images

Music for Video

3D forms (using 3D printers for output)

This list will expand to anything where one can apply a GAN, and commercial applications of GANs are growing rapidly. They range from healthcare (X-ray analysis) to finance (fraud detection), to design (assumption testing). This is a rapidly developing field that is rewiring how we design software and conduct business.

Some of the other companies in AI image generation include

Dreams, Surrealism, breeding and generation - we are in a different reality.

Growth of AI generated content

Dall-E has shown incredible growth over the past few months. By October there were more than 2 million images being generated a day. That would be about 60 million images a month or 720 million a year. The annual rate is likely to be well over a billion images a year by the end of 2022, and this is just from Dall-E. In other words, Dall-E is rapidly emerging as one of the most active sources of new images in the world. Another AI image generator, Stable Diffusion from Stability.ai, is expecting the number of images it generates a day to pass one billion (yes, with a B) at some point in 2023.
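The volume arithmetic can be checked with a few lines of Python. This is a rough sketch using the rounded 30-day month implied by the figures above; the function names are hypothetical, and the second function simply asks what daily rate an annual run rate of one billion images would require.

```python
DAYS_PER_MONTH = 30    # rounded month, matching the figures in the text
MONTHS_PER_YEAR = 12


def projected_volume(images_per_day: int) -> dict:
    """Scale a daily image count to monthly and annual totals."""
    per_month = images_per_day * DAYS_PER_MONTH
    per_year = per_month * MONTHS_PER_YEAR
    return {"per_month": per_month, "per_year": per_year}


def daily_rate_for_annual_target(target_per_year: int, days_per_year: int = 365) -> float:
    """Daily volume needed to reach a given annual total."""
    return target_per_year / days_per_year


print(projected_volume(2_000_000))
# {'per_month': 60000000, 'per_year': 720000000}
print(round(daily_rate_for_annual_target(1_000_000_000)))
# 2739726
```

On these assumptions, a run rate of a billion images a year needs roughly 2.7 million images a day, not far above the October figure.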

Given this enormous growth it is worth asking how value is being created, who it is being created for, and how that value could be monetized. Operating at this scale and delivering a great UX at scale costs money, a lot of money. According to Crunchbase, $1 billion has been invested in Open.ai with the lead investors being Microsoft, Khosla Ventures and the Reid Hoffman Foundation. These investors will want a return at some point.

How Open.ai is pricing Dall-E

Open.ai and Dall-E do have a pricing model. They are monetizing both the API and image generation by users.

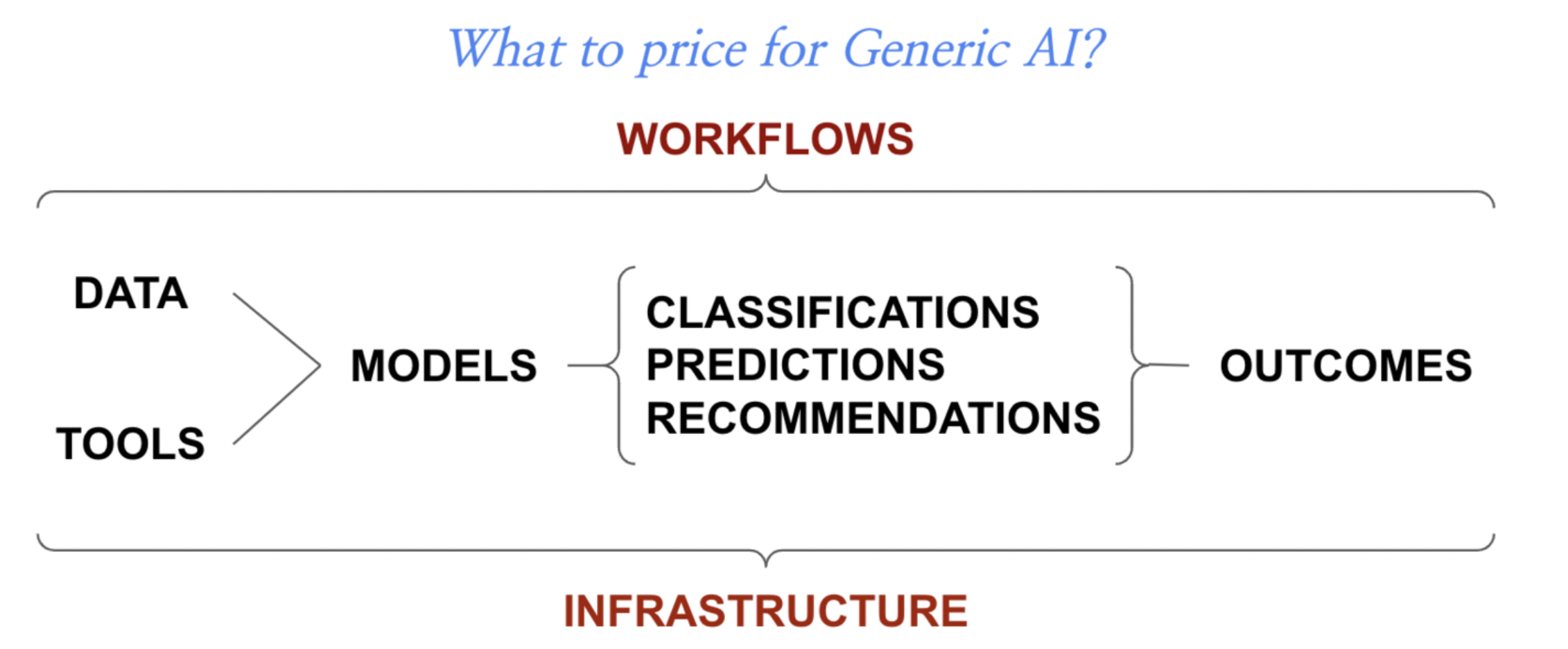

The standard pricing models for AI services come in six forms.

Resources - the cost of resources or infrastructure used to provide the service (deep learning AIs are computationally expensive and run best on hardware and software architected for their specific performance requirements; this is one reason for the sharp increase in demand for chips from Nvidia and other graphics chip makers)

Inputs - the data used for training, the number of training runs (providing data for deep learning is an emerging business in its own right); the amount of data that will be consumed by the AI once it is in production

Complexity of the model - number of layers, degree of back propagation, advanced models like Generative Adversarial Networks and so on

Outputs - number of models, number of applications of model (classifications, predictions, recommendations)

Workflows - number of workflows using the model, complexity of the workflows

Performance - accuracy of classifications, predictions, recommendations (i.e. do people act on the recommendations)

(From: How would you price generic AI services.)

Dall-E is priced using an output pricing metric. One can embed Dall-E in an application and then pay per image generated, with the price per image tied to image quality. Given the rate at which people publish images on these platforms, that could generate significant revenue. See below for thoughts on who could get value from embedding Dall-E and how.

The underlying language models used in Dall-E and other Open.ai solutions are also available. In this case the pricing metric is tokens. A token is a chunk of text (a word or part of a word) that serves as the model's unit of processing, so the token count reflects both the length and the complexity of the text. This is an input metric.

Recently, Open.ai has started to gate this service so that one gets 50 free image generations in the first month and then 15 per month thereafter. If you want to generate more than this per month you will need to start paying. At present (Nov. 5, 2022), the price is about US$0.13 per image. At 2 million images per day, if fully captured, that would be $260,000 per day or just under $95 million per year.
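The revenue estimate above is simple enough to sketch in code. The version below works in cents to avoid rounding noise and adds a hypothetical `capture_rate` parameter, since "if fully captured" is the optimistic case; the function name and the 10% example are assumptions for illustration only.

```python
def captured_revenue(
    images_per_day: int,
    price_cents: int,
    capture_rate: float = 1.0,   # share of images actually paid for (assumption)
    days_per_year: int = 365,
) -> dict:
    """Daily and annual revenue in dollars for a per-image price."""
    daily = images_per_day * price_cents / 100 * capture_rate
    return {"daily": daily, "annual": daily * days_per_year}


full = captured_revenue(2_000_000, 13)
print(f"${full['daily']:,.0f} per day")    # $260,000 per day
print(f"${full['annual']:,.0f} per year")  # $94,900,000 per year

# A less optimistic case: only one image in ten is paid for.
partial = captured_revenue(2_000_000, 13, capture_rate=0.1)
print(f"${partial['annual']:,.0f} per year")
```

Because the free allowance means many images are never paid for, the captured share is the number that matters most in an estimate like this.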

A marketplace for Image Generation AI prompts

Before we go on to look at the value of AI content generation apps, there is another interesting secondary trend. PromptBase is a marketplace to buy and sell the prompts used to generate images.

I doubt that anyone is getting rich from this. At least not yet.

But if use expands, then who knows. And if it becomes a major revenue source I expect that platforms themselves will want to control these marketplaces.

What is the value of AI image generation?

Ibbaka is deeply rooted in value-based pricing. So before we ask about how to price or how much to charge we ask about value.

Who is value created for?

How is the value created?

How much value is created?

How else could the value be created?

How much does it cost to create and deliver the value?

How much does it cost the customer to get the value?

Can we answer these questions for AI image generation platforms like Dall-E?

Not yet.

We are in the early days of category creation here. In category creation the critical first step is to get people to a shared belief about how value is being created.

Given the early stage we are at with this category, Open.ai’s approach of giving people an API to embed in their own application and then to charge for outputs and resolution of the output makes a lot of sense. But this cannot be the endpoint. Eventually Open.ai, Stability.ai and their competitors will need to lead the conversation on value.

Content generation is just plain fun

One value proposition here, an emotional value driver, is that using these systems is fun and makes people happy. I spent a couple of hours recently with my granddaughters (ages 9 and 11) coming up with prompts and laughing at the results. The nine-year-old was trying to get Dall-E to draw a purple alicorn, a unicorn with wings like Pegasus. Dall-E did not seem to know the word ‘alicorn’ (it responded with images of acorns), but with trial and error she was able to get what she wanted, at which point she switched from image generation to image editing.

The value to designers

One group concerned with the potential impact of AI content generation is professional designers, the people who get paid to create illustrations. I asked the Design Thinking group on LinkedIn for their thoughts.

As is often the case, the comments were more interesting than the poll results, especially this one.

“This has increased the amount of work I do.

Easy-to-use platforms have allowed non-designers to share their ideas. This created more ideas and buy-in of those ideas. My colleagues realize there is so much more thinking/expertise/questions, and they bring me in because I can solve problems or they don't have time to work on it.

I love these tools because they can show me what their ideas. Works in vice-versa as well. It's improved our collaboration.

Another benefit: people identified gaps without me pointing them out. They found their own answer to "well, why can't we just {insert idea}?" Some appreciated what I do even more.”

Creative Director in US

What are the economic value drivers?

Conventionally, economic value drivers are organized into six categories:

Revenue

Cost

Operating capital

Capital investment

Risk

Optionality

(There are other ways to organize value drivers, see How to organize an economic value model for use in pricing design.)

Having discussed these applications with a number of people in the design and content marketing space, here are some early ideas of how the economic value drivers could play out. The relevant value driver categories are Revenue, Cost and Optionality.

Revenue

There are likely some direct ways to impact revenue. We know that images (and video) have an impact on both SEO and engagement. Dall-E could be set up to generate images to optimize SEO for specific search terms. It is fairly easy to get from this to a revenue value driver. One could go farther and start optimizing the images that drive conversion across the pipeline or that accelerate pipeline velocity. There are well developed value drivers for this as well.

There is also the opportunity to sell the images created (see below), and Open.ai could participate in that revenue.

Cost

Image creation by people is expensive. AI content generation could reduce costs by making artists and graphic designers more productive or, in some cases, by replacing them altogether. No human artist would want to generate images for $0.13 per image.

The AI could be introduced in many parts of the workflow to make image generation easier, faster and less expensive. Adobe Creative Cloud and presentation applications like Google Slides or Microsoft PowerPoint will all include this sort of functionality, I would guess by early next year. Cost value drivers are also likely to be important if there is an economic slowdown.

Optionality

One of the most fascinating aspects of AI content generation is that it allows one to rapidly generate, explore and evolve options. The design space and our ability to explore it is massively expanded. Think about the workflow:

Create a prompt

See the images

Tweak the prompt (multiple times)

Choose an image

Generate variations of the image

Edit the image

Download the image

Use the image

One could move back and forth between steps 1, 2 and 3 many times, possibly generating hundreds of images at $0.13 each, and still arrive relatively quickly at the image one needs.
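As a rough sketch of what that exploration costs, the snippet below multiplies rounds of prompting by images per round. The figure of four images per round is an assumption for illustration, and the function name is hypothetical; only the $0.13 price comes from the text.

```python
PRICE_CENTS = 13  # about US$0.13 per image, from the text


def exploration_cost(prompt_rounds: int, images_per_round: int = 4) -> float:
    """Dollar cost of iterating on a prompt (4 images per round is an assumption)."""
    return prompt_rounds * images_per_round * PRICE_CENTS / 100


print(exploration_cost(25))  # 13.0  (100 images)
print(exploration_cost(75))  # 39.0  (300 images)
```

Even a few hundred generated images comes to tens of dollars, which is why exploring many options becomes practical.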

I expect to replace our current photo libraries with one of these AI content generation systems at some point next year.

In other words, for this use case AI content generation is a disruptive innovation against photo libraries.

Optionality value drivers require more experience and data to quantify, but they connect to resilience and adaptability and are slowly gaining recognition as an important part of some value models.

How would you price Dall-E and other AI content generation applications?

Open.ai’s current approach to pricing makes sense early in category creation. To review, they have two pricing models that I am aware of:

People generating images at $0.13 per image

Embedding the API in other applications at $0.16 to $0.20 per image depending on resolution

Note that the API cost is higher per image generated. This helps Open.ai keep its options open and prevent cannibalization while it works out its business model.
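To put a number on that gap, this small sketch computes the API premium over the per-image user price, using the figures quoted above in cents. The function name is hypothetical.

```python
def premium_pct(api_cents: int, user_cents: int) -> float:
    """Percentage by which the API price exceeds the user price."""
    return round((api_cents - user_cents) / user_cents * 100, 1)


print(premium_pct(16, 13))  # 23.1
print(premium_pct(20, 13))  # 53.8
```

On these figures the API carries a premium of roughly 23% to 54% per image, depending on resolution.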

Over time, I expect more business focussed solutions to be built from content generation AIs. As this happens, pricing models are likely to change. There are four possibilities depending on the business value being created and how easy it is to quantify that value.

Input based pricing models, where the volume and complexity of input determines the price (Open.ai uses this for its model business today).

Output based pricing models where the price is based on an estimate of the value (this is kind of what Open.ai is doing today for Dall-E).

Outcome based pricing models, which will be used in areas like SEO and pipeline optimization. They will be used anywhere there is enough data to build causal models and quantify outcomes in a way all parties can agree on.

Revenue sharing pricing models, which are a type of outcome based model in which the parties share risk and revenue over time.

These four types of pricing models are likely to become more common in AI generally, and to replace the current approaches taken by Amazon, Microsoft and Google which tend to be resource based (a fancy way of saying cost plus).
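One way to see how the four models differ is to write each as a function of a different quantity. The sketch below is illustrative only: every rate, field name and number is hypothetical and none of it reflects actual Open.ai pricing.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    tokens_in: int     # volume of input processed
    images_out: int    # number of images generated
    outcomes: int      # agreed, measurable results (e.g. conversions)


def input_based(u: Usage, price_per_1k_tokens: float) -> float:
    """Price scales with what goes into the model."""
    return u.tokens_in / 1000 * price_per_1k_tokens


def output_based(u: Usage, price_per_image: float) -> float:
    """Price scales with what comes out of the model."""
    return u.images_out * price_per_image


def outcome_based(u: Usage, fee_per_outcome: float) -> float:
    """Price scales with results both parties agree were achieved."""
    return u.outcomes * fee_per_outcome


def revenue_share(revenue_by_period: list, share: float) -> list:
    """Vendor takes a share of revenue in each period, sharing the risk."""
    return [r * share for r in revenue_by_period]


usage = Usage(tokens_in=50_000, images_out=200, outcomes=12)
print(input_based(usage, price_per_1k_tokens=0.02))
print(output_based(usage, price_per_image=0.13))
print(outcome_based(usage, fee_per_outcome=5.0))
print(revenue_share([1000.0, 0.0, 2500.0], share=0.1))
```

The first two depend only on usage the vendor can meter itself. The last two depend on data about the customer's results, which is why they need agreement on how outcomes are measured.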

Over time, I expect some form of outcome based pricing to win in most categories. The promise of AI is better predictions. The barriers to outcome based pricing have been the difficulty of prediction and the challenge of untangling causal relations. AI should help to address both.

Dall-E, ownership and bias

Ownership of images generated by an AI

Some of the revenue models are going to turn on ownership of the image generated.

On November 5, 2022 this was Open.ai’s policy.

You own the generations you create with DALL·E.

We’ve simplified our Terms of Use and you now have full ownership rights to the images you create with DALL·E — in addition to the usage rights you’ve already had to use and monetize your creations however you’d like. This update is possible due to improvements to our safety systems which minimize the ability to generate content that violates our content policy.

So you can download what ‘you’ ‘create’ and claim ownership. You could even try to sell the images, maybe as an NFT (Non-Fungible Token), and become part of that bubble.

For more on the ownership of AI generated images see Who owns DALL-E images? Legal AI experts weigh in.

So far so good, but are these systems violating other forms of ownership? One of the most powerful, and engaging, ways of using Dall-E is to create a prompt ‘XYZ in the style of ABC’ (see the examples below, ‘a quiet lake in the style of’ Miro, Dali, Lee Ufan, Emily Carr). One of these people, Lee Ufan, is a living artist.

What if I tried a prompt like ‘a fat human like pig in the style of Miyazaki, Pixar, Disney’? Miyazaki (Studio Ghibli), Disney and Pixar are all aggressive about protecting their intellectual property and style. They are not likely to accept this use.

Bias in AI generated images

And then there is the question of bias. Dall-E seems to be much better at generating images influenced by European and American art, animation and illustration. It does less well with indigenous, Asian or Latin American themes. Perhaps this will self-correct with time. But there are a lot of feedback loops built into these systems. A GAN basically structures feedback between two competing AIs. These systems tend to be what is called Barnesian performative. In other words, the more they are used the more they come to define the norm, and the range of what is considered, and what is thought possible, narrows rather than expands.

See: AI art looks way too European and the example below of the Japanese suiboku painter Sesshu.

AI content generation is a ‘brave new world’ in both Shakespeare’s sense (The Tempest) and Huxley’s (Brave New World).

Just for fun, five sets of images from Dall-E “quiet lake in the style of …”

Lee Ufan (I am impressed that the AI has Lee Ufan’s style in its model. These are not exactly on point, but they are not bad and are my favourites of this set. But then, Lee Ufan is one of my favourite artists.)

Sesshu (to me these look nothing like the work of the iconic Japanese Suiboku artist Sesshu)

An actual work by Sesshu